With all of the troubles in this modern world, I strongly feel it’s important that each of us help those who are less fortunate than ourselves. I have personally been on both sides of this issue, and I know that life can be difficult, happy, joyful, or seemingly impossible at times. A close friend recently reminded me of this. Here are 20 inspiring Bible verses for all of the Christians out there (you know who you are). Even if you aren’t a Christian, you may find that you agree with the philosophy or spirit of these sayings. It certainly can’t be a bad thing to help your fellow human beings when they need it most…

**1) Psalm 34:6**

This poor man called, and the Lord heard him;

he saved him out of all his troubles.

**2) Psalm 35:10**

My whole being will exclaim, “Who is like you, Lord?

You rescue the poor from those too strong for them,

the poor and needy from those who rob them.”

**3) Psalm 82:3**

Defend the weak and the fatherless;

uphold the cause of the poor and the oppressed.

**4) Psalm 109:30-31**

With my mouth I will greatly extol the Lord;

in the great throng of worshipers I will praise him.

For he stands at the right hand of the needy,

to save their lives from those who would condemn them.

**5) Psalm 113:7**

He raises the poor from the dust and lifts the needy from the ash heap;

**6) Psalm 140:12**

I know that the Lord secures justice for the poor

and upholds the cause of the needy.

**7) Psalm 146:8**

The Lord gives sight to the blind,

the Lord lifts up those who are bowed down,

the Lord loves the righteous.

**8) Proverbs 14:31**

Whoever oppresses the poor shows contempt for their Maker,

but whoever is kind to the needy honors God.

**9) Proverbs 14:21**

It is a sin to despise one’s neighbor,

but blessed is the one who is kind to the needy.

**10) Proverbs 19:17**

Whoever is kind to the poor lends to the Lord,

and he will reward them for what they have done.

**11) Proverbs 22:9**

The generous will themselves be blessed,

for they share their food with the poor.

**12) Proverbs 28:27**

Those who give to the poor will lack nothing,

but those who close their eyes to them receive many curses.

**13) Proverbs 31:8-9**

Speak up for those who cannot speak for themselves,

for the rights of all who are destitute.

Speak up and judge fairly;

defend the rights of the poor and needy.

**14) Proverbs 31:20**

She opens her arms to the poor

and extends her hands to the needy.

**15) Isaiah 58:6-8**

“Is not this the kind of fasting I have chosen:

to loose the chains of injustice

and untie the cords of the yoke,

to set the oppressed free

and break every yoke?

Is it not to share your food with the hungry

and to provide the poor wanderer with shelter—

when you see the naked, to clothe them,

and not to turn away from your own flesh and blood?

Then your light will break forth like the dawn,

and your healing will quickly appear;

then your righteousness will go before you,

and the glory of the Lord will be your rear guard.

**16) Micah 6:8**

He has shown you, O mortal, what is good.

And what does the Lord require of you?

To act justly and to love mercy

and to walk humbly with your God.

**17) Zechariah 7:8-10**

And the word of the Lord came again to Zechariah: “This is what the Lord Almighty said: ‘Administer true justice; show mercy and compassion to one another. Do not oppress the widow or the fatherless, the foreigner or the poor. Do not plot evil against each other.’”

**18) Luke 3:11**

John answered, “Anyone who has two shirts should share with the one who has none, and anyone who has food should do the same.”

**19) 1 John 3:16-18**

This is how we know what love is: Jesus Christ laid down his life for us. And we ought to lay down our lives for our brothers and sisters. If anyone has material possessions and sees a brother or sister in need but has no pity on them, how can the love of God be in that person? Dear children, let us not love with words or speech but with actions and in truth.

**20) James 1:27**

Religion that God our Father accepts as pure and faultless is this: to look after orphans and widows in their distress and to keep oneself from being polluted by the world.

Blog

-

20 Bible Verses About Helping the Poor

-

“Trixie” Available for Raspberry Pi

In case you missed it, the new version of Raspberry Pi OS, based on Debian 13 (“Trixie”), is now available for download! Please see here for details: https://www.raspberrypi.com/news/trixie-the-new-version-of-raspberry-pi-os/.

If you are running a Hailo AI accelerator on your Raspberry Pi, make sure to wait until updated Hailo libraries are released for Trixie before you make the switch!

-

OpenScan

OpenScan is open-source 3D scanning software that lets users create detailed digital models of objects from laser-scanned data or photogrammetry images. It’s a versatile tool for a variety of applications, including:

- Reverse engineering: Create accurate digital models of mechanical parts, products, or artifacts.

- Product design: Use scanned data to inform product design, prototyping, and manufacturing.

- Archaeology and heritage conservation: Record and document cultural heritage sites, monuments, or artifacts for preservation and research purposes.

- Industrial inspection: Monitor equipment condition, detect defects, and optimize maintenance schedules using scanned data.

- Scientific research: Capture detailed 3D models of samples, specimens, or objects in various scientific fields (e.g., biology, medicine, geology).

Some key features of OpenScan include:

- Multi-sensor support: Handle data from various 3D scanning technologies (laser scanners, structured light scanners, photogrammetry cameras).

- Advanced algorithms: Employ techniques like ICP (Iterative Closest Point), RANSAC (Random Sample Consensus), and Delaunay triangulation for accurate model reconstruction.

- User-friendly interface: Intuitive graphical user interface (GUI) makes it easy to navigate and process scanned data.

- Customization: Users can modify or create their own plugins, filters, or scripts using Python scripting capabilities.
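The ICP (Iterative Closest Point) technique listed above is easier to picture with a toy example. The sketch below is a generic 2D point-to-point ICP in plain Python, not OpenScan’s own code; the function names (`icp_2d`, `best_rigid_transform`, `nearest`, `transform`) are hypothetical, nearest neighbours are found by brute force, and real scanning software works on large 3D point clouds with spatial indexes and outlier rejection.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def nearest(p: Point, cloud: List[Point]) -> Point:
    """Brute-force nearest neighbour of p in cloud (O(n) per query)."""
    return min(cloud, key=lambda q: math.dist(p, q))


def best_rigid_transform(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares 2D rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # source point relative to its centroid
        bx, by = qx - dx, qy - dy  # target point relative to its centroid
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(p: Point, theta: float, tx: float, ty: float) -> Point:
    """Rotate p by theta (radians) about the origin, then shift by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def icp_2d(source: List[Point], target: List[Point],
           max_iterations: int = 50, tolerance: float = 1e-12):
    """Point-to-point ICP. Returns (theta, (tx, ty), mean_error)."""
    current = list(source)
    theta, tx, ty = 0.0, 0.0, 0.0
    prev_error = error = math.inf
    for _ in range(max_iterations):
        # 1. Pair every current point with its closest target point.
        matches = [nearest(p, target) for p in current]
        error = sum(math.dist(p, q) for p, q in zip(current, matches)) / len(current)
        # 2. Stop once the mean error no longer improves meaningfully.
        if prev_error - error < tolerance:
            break
        prev_error = error
        # 3. Solve for the best rigid step for these pairs and apply it.
        d_theta, d_tx, d_ty = best_rigid_transform(current, matches)
        current = [transform(p, d_theta, d_tx, d_ty) for p in current]
        # 4. Compose the step with the running total (step applied after total).
        c, s = math.cos(d_theta), math.sin(d_theta)
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
    return theta, (tx, ty), error


if __name__ == "__main__":
    shape = [(0, 0), (1, 0), (2, 0.5), (3, 1.5), (4, 3), (1, 2), (0, 3)]
    moved = [transform(p, math.radians(5), 0.2, 0.1) for p in shape]
    theta, (tx, ty), err = icp_2d(shape, moved)
    print(f"rotation={math.degrees(theta):.3f} deg, shift=({tx:.3f}, {ty:.3f}), error={err:.2e}")
```

Each round pairs every point with its closest neighbour, solves for the rotation and shift that best line the pairs up, and repeats until the average gap stops shrinking. In this small example the first pairing is already correct, so it should recover the 5° rotation and (0.2, 0.1) shift almost immediately; with larger starting offsets, ICP can settle on a wrong alignment, which is why it is typically given a rough pre-alignment first.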

While OpenScan is an impressive tool with a dedicated community, keep in mind that it is complex and has a steep learning curve, so some experience with 3D scanning, programming, or computer-aided design (CAD) software will help.

-

Debian 13 “Trixie” Released Today!

WooHoo! Debian 13, codename “Trixie,” was released today, with all sorts of new goodness to play with! Here are the Press Release, the Release Notes, and a Direct Link to get the goodness that is Debian! Also, here is How to Upgrade to Trixie for all of you who already “Know the Love”!

-

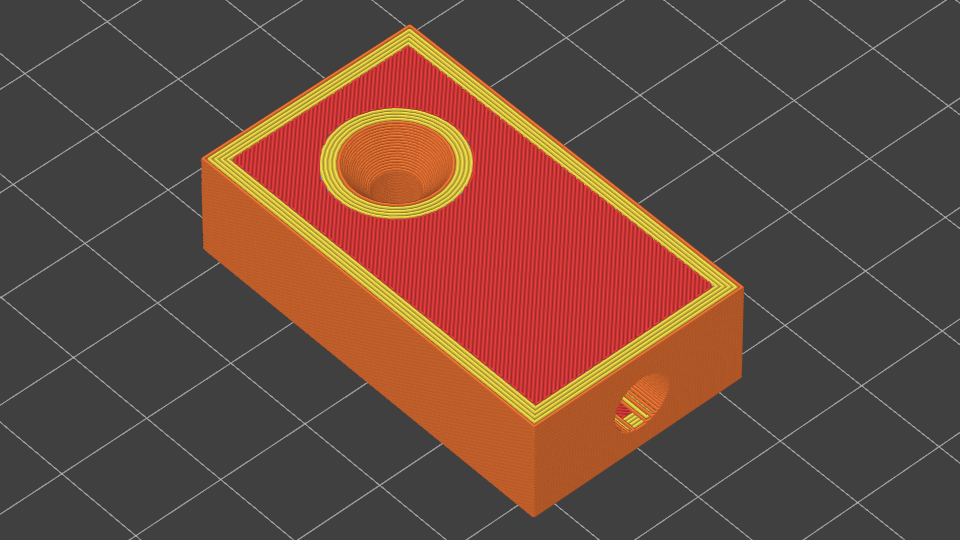

Raised Bed Anchor Block for Garden Hoops

Here is a Raised Bed Anchor Block for Garden Hoops, a design I made just the other day for my wife, which fits this Garden Hoop Kit from Amazon. It’s a simple yet sturdy design: just screw the blocks into the side of your raised garden bed, flush with the top board, put the hoop ends into the holes, and assemble the remaining parts of your hoops!

Its overall dimensions are 44.5mm long, 25.4mm wide, and 12mm tall. It has a 6.35mm wide by 22mm deep hole for the hoop end to fit into, and it accepts a standard #8 1⅝” deck screw of your choosing. The downloadable zip file contains both the 3MF and the STL, so if you need to customize the design, just edit the necessary bits in the 3MF file and export it as an STL using your favorite design software.

Made with OpenSCAD and PrusaSlicer.

Printer/Slicer Settings:

Horizontal Shells – 5 Top, and 5 Bottom.

Vertical Shells – 5 Perimeters

Recommended Infill – 50%, any pattern you like (I use Grid or Gyroid).

Recommended Material: I used HatchBox Rapid PETG in Dark Green.
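If you like to keep print settings as a file rather than clicking them in each time, the shell and infill values above can be written out as a small config fragment. The sketch below is a hypothetical helper, not something included in the downloadable zip; it assumes PrusaSlicer’s option names `top_solid_layers`, `bottom_solid_layers`, `perimeters`, `fill_density`, and `fill_pattern`, so compare them against an .ini exported from your own copy of PrusaSlicer before relying on it. Material settings are left out.

```python
# Print settings from this post, keyed by (assumed) PrusaSlicer option names.
ANCHOR_BLOCK_SETTINGS = {
    "top_solid_layers": 5,     # Horizontal shells: 5 top
    "bottom_solid_layers": 5,  # Horizontal shells: 5 bottom
    "perimeters": 5,           # Vertical shells: 5 perimeters
    "fill_density": "50%",     # Recommended infill
    "fill_pattern": "gyroid",  # "grid" works too
}


def to_ini(settings: dict) -> str:
    """Render settings as 'key = value' lines, one option per line."""
    return "".join(f"{key} = {value}\n" for key, value in settings.items())


if __name__ == "__main__":
    # Print to stdout; redirect to a file if you want to keep it.
    print(to_ini(ANCHOR_BLOCK_SETTINGS), end="")
```

Running it as, for example, `python anchor_settings.py > anchor_block.ini` gives a short fragment you can check against your printer profile before importing it.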

I will put it up on Thingiverse and Printables for anyone who wants to print it themselves or add it to their collections. It will also be available in my Etsy shop (coming soon) if you would like me to make it for you.

Here are the links:

- My Etsy Shop – Coming Soon

- Printables

- Thingiverse

-

We’re Not Ready for Superintelligence

I saw a great video on YouTube this morning about humanity’s relationship with AI and where things might be headed. Please check out this video: We’re Not Ready For Superintelligence.

-

My Journey with OpenWebUI

July 4, 2025:

Today, I am starting my journey with OpenWebUI, a self-hosted AI platform.

I will be adding to this post as I go along – sharing in the discovery, failures, learning, frustration, and successes of hosting my own local AI.

July 16, 2025:

Hosting your own AI has many benefits:

Hosting your own local Artificial Intelligence (AI) solution can bring several benefits to individuals, organizations, and communities. Here are some advantages, according to my OpenWebUI instance running the Llama 3.1 (8B) model:

- Improved Data Privacy: By hosting AI locally, you maintain control over the data and ensure it remains within your premises or a secure, trusted location. This minimizes the risk of data breaches, cyber attacks, and unauthorized access.

- Enhanced Security: Local AI deployment reduces the attack surface for malicious actors to target, as there’s no need to transmit sensitive data to the cloud. You can implement robust security measures to protect your system from vulnerabilities.

- Increased Performance: Hosting AI locally allows for faster processing times and reduced latency, especially when dealing with large datasets or real-time applications. This is particularly beneficial in applications like autonomous vehicles, drones, or smart cities.

- Reduced Dependence on the Cloud: By having a local AI infrastructure, you’re less reliant on cloud services, which can be vulnerable to outages, downtime, or changes in their terms of service.

- Customization and Flexibility: Local AI hosting enables you to tailor the solution to your specific needs and requirements. You can modify the architecture, algorithms, and models to suit your organization’s unique circumstances.

- Cost Savings: While initial investment costs may be higher for local AI deployment, they can lead to long-term cost savings by reducing cloud service fees, data transmission costs, and potential penalties for non-compliance with regulations.

- Regulatory Compliance: By hosting AI locally, you may be better positioned to comply with specific regulatory requirements, such as data residency laws, GDPR, or CCPA, which mandate the storage of sensitive data within a country’s borders.

- Edge Computing Benefits: Local AI deployment enables edge computing applications, where processing happens closer to the source of the data (e.g., IoT devices). This reduces latency and improves real-time decision-making capabilities.

- Disaster Recovery and Business Continuity: With a local AI infrastructure in place, you can ensure business continuity during outages or disasters by switching to a secondary system or location.

- Research and Development Opportunities: Hosting local AI solutions creates opportunities for research and development (R&D) within your organization, allowing you to explore new applications, models, and techniques.

Usefully Fast:

Mind you, it took my AI rig only 13 seconds to take my prompt (“Benefits of hosting your own local AI”), think about it, and write this output for me (and you) in a clean, easy-to-read format. It also gave me “Follow up” questions like these:

Follow up

What are some common challenges or considerations when implementing a local AI solution?

Can you provide more information on the hardware requirements for hosting a local AI solution?

How do I choose the right AI framework or platform for my specific needs?

Thoughts:

As you can see, you get a lot of information, plus suggested follow-up questions, pretty quickly. That’s not too shabby for a six-year-old computer I cobbled together from spare parts, running a currently “normal”-sized LLM. You can get information on an incredible range of subjects in a clear, concise format that can help with your workflows and/or thought processes, without spending too much money. From what I have learned, the most important part is a good GPU (Graphics Processing Unit), since AI speed is mostly determined by the GPU, not the CPU. To save you the time and hassle of doing the research yourself, here is an Amazon link to the GPU I’m using for my AI rig: Nvidia 3060 12GB OC Edition. It is a modestly priced, cost-effective GPU with quite a bit of VRAM, which is (primarily) what the model running behind Open WebUI uses to process your requests and return the outputs.
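Since VRAM is the main constraint, a quick back-of-the-envelope check shows why a 12GB card pairs well with an 8B model. The sketch below only counts the model weights (parameters × bits per parameter ÷ 8), assumes a nominal 8.0 billion parameters and that the card’s 12GB means 12 GiB, and ignores the context (KV) cache and runtime overhead, so real usage will be higher. Which precision or quantization your model runner actually loads is also an assumption here; the function names are hypothetical.

```python
GIB = 2 ** 30  # bytes per GiB


def weights_gib(n_params: float, bits_per_param: float) -> float:
    """Memory needed for the model weights alone, in GiB."""
    return n_params * bits_per_param / 8 / GIB


def fits_in_vram(n_params: float, bits_per_param: float, vram_gib: float) -> bool:
    """True if the weights alone fit in vram_gib. KV cache and runtime overhead
    are ignored, so True is a necessary condition, not a sufficient one."""
    return weights_gib(n_params, bits_per_param) <= vram_gib


if __name__ == "__main__":
    params = 8.0e9  # nominal parameter count for Llama 3.1 (8B)
    for bits in (16, 8, 4):
        size = weights_gib(params, bits)
        print(f"{bits:>2}-bit weights: {size:5.2f} GiB, fits in 12 GiB: {fits_in_vram(params, bits, 12)}")
```

By this arithmetic, 16-bit weights alone (about 14.9 GiB) would not fit on the card, while 8-bit (about 7.5 GiB) or 4-bit (about 3.7 GiB) versions leave room for everything else.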

-

So, Here Goes…

This is our WordPress site, where we will put stuff we want to post/share, in hopes that some of you fine folks on the Interwebs will find something entertaining, informative, or useful.

If you are interested in 3D-Printing, Linux, Open Source Software, Self-Hosted AI, or just Technology in general, you are in the right place.

We’ll try to keep things as simple as possible, since it is getting harder and harder to keep up with the complexities of life in this modern world.

So, without further ado, onward and upward!